What makes a good "regrant"?

Reviewing some of our favorite AI safety regrants - and some less good fits

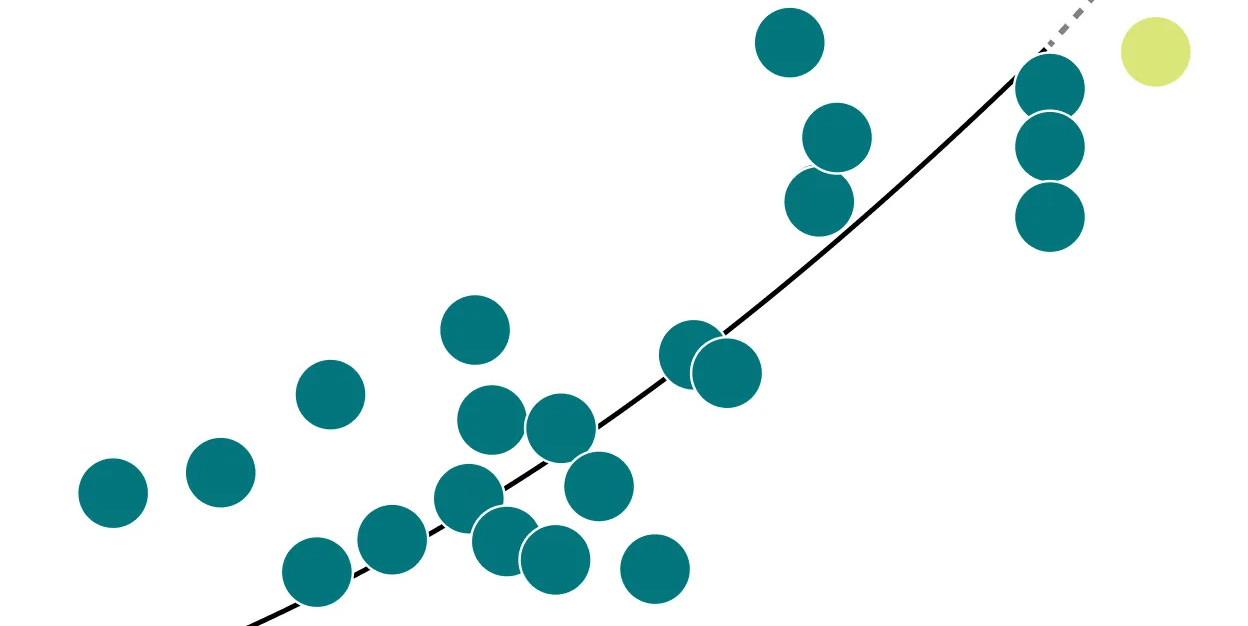

AI moves at a crazy pace. To keep up, AI safety needs to move fast, and that means funding has to move with it. Take Timaeus: our regrantors weren’t their biggest funders, but they were first, allowing Timaeus to start months earlier — and in a world of exponential curves, mere months matter.

This is why Manifund runs our regranting program: to quickly fund promising projects, by delegating budgets of $50K-$400K to experts. As we’ve just announced our 2025 regrantors, now is a good time to review past regrants: some we think were great, and others that weren't such good fits.

We think our regranting program is great for donors who want to seed ambitious new projects, care about moving fast, and appreciate transparency. If you want to fund our 2025 program, please contact us!

About the author: Jesse Richardson recently joined Manifund after working at Mila - Quebec AI Institute, and also has a background in trading on prediction markets. This post is basically Jesse’s low-confidence hot takes.

Three awesome AI Safety regrants…

What makes a great regrant? We look for early-stage projects that need quick funding, opportunities OpenPhil might miss, and chances to leverage our regrantors' unique expertise. Some of our favorites are:

Scoping Developmental Interpretability to Jesse Hoogland - the first funding for Timaeus, accelerating its research by months

Support for deep coverage of China and AI to ChinaTalk - reporting on DeepSeek, ahead of the curve

Shallow review of AI safety 2024 to Gavin Leech - quick regrants inducing further funding from OpenPhil & others

Scoping Developmental Interpretability - the first funding for Timaeus, accelerating research by months

This regrant was made in late 2023 to Jesse Hoogland and the rest of what is now the Timaeus team, for the purposes of exploring Developmental Interpretability (DevInterp) as a new AI alignment research agenda. Four regrantors made regrants to this project totalling $143,200: Evan Hubinger as the main funder, alongside Rachel Weinberg, Marcus Abramovitch and Ryan Kidd. Evan had previously mentored Jesse Hoogland as part of MATS, and therefore had additional context about the value of funding Hoogland’s future research. This is the sweet spot for regranting: donors may have the public information that Evan Hubinger is an expert who does good work, but regranting allows them to leverage his private information about other valuable projects, such as DevInterp.

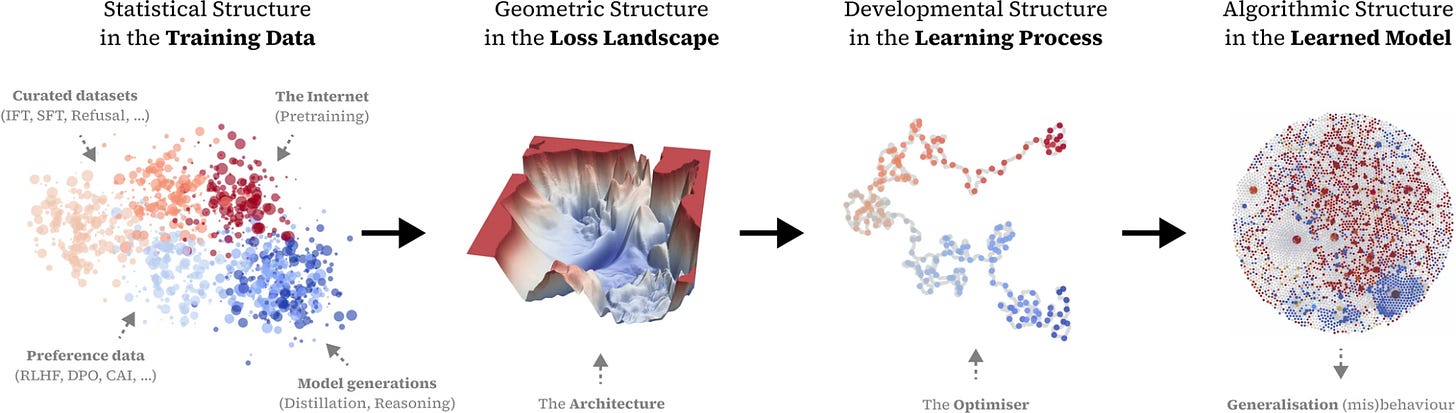

As for the grant itself, success for this project looked like determining whether DevInterp was a viable agenda to move forward with, rather than producing seminal research outputs. I recommend reading more about DevInterp if you’re interested, but my shallow understanding is that it aims to use insights from Singular Learning Theory (SLT) to make progress on AI alignment through interpretability, focusing on how phase transitions in the training process lead to internal structure in neural networks.

I’m not well placed to form an inside view on how likely DevInterp was/is to succeed, but this proposed research agenda had numerous things going for it:

it was novel; the application of SLT to alignment was largely unexplored prior to this work,

it seemed to be well thought out; the LessWrong write-up included plenty of detail about why we might expect phase transitions to be a big deal and how this would relate to alignment, as well as a solid six-month plan,

it had an element of “big if true” i.e., it may be unlikely that the strong version of the DevInterp thesis is true, but this research has potential to make meaningful progress on AI alignment if it is

These are all markers of projects I am excited to see funded through Manifund regranting. In addition to the agenda itself, I also think this was a good team to bet on for this kind of work; they seem capable and have relevant experience e.g. ML research, and running the 2023 SLT & Alignment Summit.

This regrant is a strong example of where Manifund’s regranting program can have the biggest impact: being early to support new projects & organizations, and thereby providing strong signals to other funders as well as some runway for these organizations to move quickly. In this case, Manifund’s early funding helped Hoogland’s team get off the ground, and they subsequently started a new organization (Timaeus) and received significantly more funding from other sources, such as $500,000 from the Survival & Flourishing Fund. It’s probable that they would’ve gotten this other funding regardless, but not guaranteed, and I’m happy that Manifund helped bring Timaeus into existence several months sooner and with increased financial security. Hoogland notes:

Getting early support from Manifund made a real difference for us. This was the first funding we received for research and meant that we could start months earlier than we otherwise would have. The fact that it was public meant other funders could easily see who was backing our work and why. That transparency helped us build momentum and credibility for developmental interpretability research when it was still a new idea. I'm pretty sure it played a significant role in us securing later funding through SFF and other grantmakers.

In terms of concrete outcomes, there’s a lot to be happy with here. Timaeus and its collaborators have published numerous papers on DevInterp since this regrant was made, and it seems that DevInterp’s key insight around the existence and significance of phase transitions has been validated. My sense is that the question of whether DevInterp is a worthwhile alignment research agenda to pursue has been successfully answered in the affirmative. It’s also nice to see strong outreach and engagement with the research community on the part of Timaeus: November 2023 saw the first DevInterp conference, and they’ve given talks at OpenAI, Anthropic, and DeepMind.

Support for Deep Coverage of China and AI - reporting on DeepSeek, ahead of the curve

In 2023 & 2024, Manifund regrantors Joel Becker and Evan Hubinger granted a total of $37,000 to ChinaTalk, a newsletter and podcast covering China, technology, and US-China relations. ChinaTalk has over 50,000 subscribers and is also notable for the quality of its coverage and the praise and attention it receives from elites and policymakers.

Before this regrant, ChinaTalk had been run by Jordan Schneider and Caithrin Rintoul, both part-time, on a budget of just $35,000/year. What they were able to accomplish in that time with those limited resources was impressive, and I believe merited additional funding, even just to allow Jordan to work on this full-time. More funding would also have meant ChinaTalk bringing on a full-time fellow who, per Jordan, “would be, to my knowledge, the only researcher in the English-speaking world devoted solely to covering China and AI safety.” ChinaTalk has since received further funding and is in the process of growing to five full-time employees, but we would’ve loved for this to happen sooner through an expanded regranting program.

Even putting aside the specific track record of ChinaTalk, it seems clear to me that the intersection of China and AI safety is an incredibly important area to cover, and at a high level it is valuable to fund organizations that are doing this kind of work. It can be hard to imagine plausible scenarios of how the next decade goes well with respect to AI that don’t run through US-China relations, and I am persuaded by Jordan’s case that the amount of energy currently being expended on this is grossly inadequate.

Since the first regrant, ChinaTalk’s Substack audience has grown from 26,000 subscribers to 51,000 and they’ve put out regular high-quality content, including an English translation of an interview with DeepSeek CEO Liang Wenfeng, coverage of chip policy, and what important 2024 elections in the US and Taiwan mean for China. The ChinaTalk team has expanded to six people, allowing for a greater diversity and quantity of coverage, including YouTube videos. Jordan has also announced plans for launching a think tank—ChinaTalk Institute—this year, in a similar vein to IFP.

Among their varied coverage, I was particularly impressed to see how ChinaTalk was ahead of the curve in covering the rise of DeepSeek, while most of the West seemed to be taken by total surprise in January 2025. As a trader and forecaster, I might have made a lot of money from this advance insight by anticipating the market freakout, which suggests I should pay more attention to ChinaTalk in the future.

ChinaTalk has continued on the strong trajectory it was on in late 2023, and it was great that Manifund was able to support ChinaTalk in this success. For more information about why this grant was likely good ex ante, I encourage you to look at regrantor Joel Becker’s comment on the subject. Joel’s detail about why ChinaTalk was at the time insufficiently funded

Philanthropists are scared to touch China, in part because of lack of expertise and in part for political reasons. Advertisers can be nervous for similar reasons… Jordan was hoping to support this work through subscriptions only.

makes me more optimistic that this regrant was the kind of thing the program should be doing: plugging holes in the funding landscape.

Shallow review of AI safety 2024 - quick regrants, nudging OpenPhil & others to donate

Gavin Leech co-wrote Shallow review of live agendas in alignment & safety in 2023, which was well-received and considered a useful resource for people looking to get a top-level picture of AI safety research. Given that it was intended to be a shallow review, this post has a lot of helpful detail and links for various research agendas, e.g., the amount of resources currently devoted to each, and notable criticisms.

Last year, he sought funding to create an updated 2024 version of this post. He received $9,000 from Manifund regrantors Neel Nanda and Ryan Kidd, as well as $12,000 from other donors through the Manifund site.

Big picture, I believe there should be an accessible and up-to-date resource of this kind; for people who are starting out in AI safety and don’t know anything, for funders trying to get a sense of the landscape, or for anyone else who might need it. In 2022 I was at a stage where I wanted to contribute to AI safety but didn’t know anything about it and was unsure where to start, and I would’ve likely found Gavin’s review useful, along with the other resources that existed. Based on this, Gavin’s record in a variety of fields, and the quality of the 2023 version, I think this regrant looked promising.

The new post (Shallow review of technical AI safety, 2024) came out in December 2024 and appears to be similarly comprehensive to the 2023 version, although it has gotten less attention (~half the upvotes on LessWrong and not curated). That’s probably a somewhat worse outcome than I would’ve hoped for, but I still would have endorsed this grant had I known the result in advance. Presumably the updated version is less eye-catching than the original, while still being necessary.

The funding of this project also shows the advantages of the Manifund regranting program. Gavin asked for between $8,000 (MVP version) and $17,000 (high-end version) and was quickly funded for the MVP by Neel and Ryan. He then got an additional $5,000 from OpenPhil, after Matt Putz learned about this proposal via our EA Forum post, and a further $12,000 from other donors. I am happy with how the regranting program is able both to provide the small amount of funding needed to get a project off the ground, and to increase the visibility of that project so that other donors can step in and fund it to a greater extent. A couple of small negatives: (1) regrantor Neel Nanda is less optimistic than I am that this was a particularly good grant and (2) the high-end version was supposed to include a “glossy formal report optimised for policy people” which didn’t get made (OpenPhil opted against funding it); however, the excess money is instead going towards the 2025 edition. I look forward to it!

… And three that maybe weren’t a good fit for regranting

Pilot for new benchmark to EpochAI - a limited-info regrant that might have accelerated AI capabilities

AI Safety & Society to CAIS - cool new project on AI safety writing, less exciting for regranting specifically because the regrantor was also leading the project

General support to SaferAI - an org doing important work in AI policy, but might be growing too fast

Austin here. FWIW, we are very grateful to the regrantors for recommending these, and aren’t saying that these grants are bad, per se. In fact, I think some of these grantees are amazing and deserve lots more funding! We just think that the facts around these grants mean that they’re not good examples of where Manifund’s regranting can be differentially good, compared to other mechanisms (such as LTFF or OpenPhil’s), or to donors giving directly to these orgs, through Manifund or otherwise.

This matters because when we (and our donors) consider whether to run regranting, we want to see that the program is counterfactually moving funds towards projects that wouldn’t have them otherwise. In startup terms, we want to see regrants that are like angel investments in undervalued people and projects; the regrants below feel more like post-Series A top-ups. Even if the money is used well, the grant itself doesn’t signal much to the broader AI safety community; the quality of these orgs is already priced in. Now back to Jesse!

Pilot for new benchmark by Epoch AI - unfortunately confidential

This regrant consists of $200,000 from regrantor Leopold Aschenbrenner to Epoch AI to support the pilot of a new frontier AI benchmark. Epoch is a research organization that does some great work on forecasting AI progress, AI hardware, the economics of AI, and more. For this reason, they are by default a good candidate for funding through our regranting program. [Editor’s note: in fact, we’ve asked Tamay to serve as a regrantor for 2025!] However, there are a few reasons why I am less excited about this regrant than many of the other projects that could’ve been funded.

Firstly, this proposal presented very little information, for confidentiality purposes. While I understand why this was necessary, the lack of transparency is not ideal from Manifund’s perspective. Part of the value we hope potential grantees can get from the regranting program is increased awareness of their project, so that other funders can support them, and hopefully to improve understanding of what work is being done in AI safety. The lack of detail here makes that difficult.

Secondly, I think the program works best when regrantors give smaller amounts to many projects, compared with the (relatively) larger sum given to Epoch here. We generally want to be taking risks on getting smaller projects off the ground, with the hope that other funding sources can take over if/when their funding needs exceed what Manifund can offer. Part of our comparative advantage lies in the flexibility and speed of the regranting program, so I place more value on regrants that lean on that advantage. On the other hand, this is a pilot, so the proposal does fit the loose remit in that sense. I would also be happier about the relatively large grant if it came from multiple regrantors on the site (as with Timaeus), as that’s further evidence that a proposal is worth funding according to multiple different experts.

Thirdly, related to the above, Epoch has already received significant funding from OpenPhil and SFF, suggesting they wouldn’t benefit most from Manifund’s support. Aschenbrenner remarks that Epoch has other funding, but not for this project. I wonder whether that’s a signal that this project is not as valuable as Epoch’s other work, in the eyes of the Epoch leadership team and their previous funders?

Finally, while I highly value a lot of Epoch’s forecasting work, I think there is growing evidence that benchmarks of this kind help accelerate AI capabilities. The new reasoning-via-RL paradigm for frontier LLMs means that one of the biggest bottlenecks to AI progress is now high-quality reward signals to train on, which new benchmarks may assist in providing. This is evident in Epoch’s FrontierMath benchmark, which OpenAI commissioned and has sole access to (except for a holdout set). While the math nerd in me thinks FrontierMath is really cool, it seems to me that OpenAI thought creating this benchmark would be to their benefit, either through directly speeding up their progress or for marketing reasons. I find this concerning. We don’t know exactly whether this new benchmark would accelerate AI capabilities, or what the ownership structure of it would be, but that ultimately comes back to difficulties stemming from lack of transparency. I would be more confident about the positive value of this regrant if there were more information about this thorny question. There is significant disagreement about whether contributing to AI capabilities is in fact negative for the world, and I want to defer some to that uncertainty, but my personal view is that it does increase the probability of global catastrophe and I am therefore more pessimistic about this regrant. I would’ve preferred to see our regrantors fund a wider array of projects, as well as projects that are more transparent and ideally carry less downside risk.

AI Safety & Society - a direct donation may have made more sense

AI Safety & Society (AISS) was the proposed name for a new platform by the Center for AI Safety (CAIS) designed to foster high-quality AI safety discourse, filling a gap between social media and formal academic publications. This platform launched two weeks ago, under a new name: AI Frontiers. It received a regrant of $250,000 from Manifund regrantor and CAIS Executive Director Dan Hendrycks, who is also advising the project and is now Editor-in-Chief of AI Frontiers.

There’s a lot here that I’m excited about: I agree with the need for more good AI safety writing, and ensuring that AI safety ideas reach a broader audience. I also trust Dan and the CAIS team to execute this well, and they have a lot of the right contacts and experience to get this off the ground. Looking at the articles published on AI Frontiers in the last two weeks, I’m impressed with the quality and scope.

Despite the positives, I’m unconvinced this was a great outcome for the Manifund regranting program, because it involved a regrantor using their budget to support their own organization’s project. To be clear, I think this was a reasonable use of money. It makes sense that Dan Hendrycks’ reasons for supporting this project professionally also lead him to think it’s a good place to direct funding. Yet this doesn’t strike me as making valuable use of the regranting program, compared with other funding mechanisms. Consider the two main ways a hypothetical donor might want to defer to Hendrycks’ judgment as an AI safety expert:

they might specifically value the work he’s doing with CAIS and projects such as AI Frontiers, or

they might value his ability to discover and rate AI safety work more generally

The former situation should probably lead that donor to just donate to CAIS directly; the latter is a case that the regranting program was specifically designed to assist with. This regrant feels more like the former, which I don’t think is a problem in itself, but I would feel less optimistic about continuing the regranting program if this were the main way in which it was used. That being said, I hope this project succeeds and if AI Frontiers does have a big positive impact, I might end up thinking that the specifics of how it got funded were less important than getting it funded at all.

This post should not be seen as knocking AI Frontiers, a nascent project that could be highly impactful, but rather as articulating a view on the best use cases for regranting. Looking forward, I wouldn’t want Manifund regrantors to rule out funding projects they’re involved in, but such a decision should perhaps merit some extra skepticism about whether this is the best use of a regrantor budget.

[Editor’s note: we reached out to Dan about this grant, and he disputes this inclusion. In particular, he notes that AI Frontiers has only been out for a couple weeks, and that his previous grant to the WMDP benchmark would make a better example, as that grant has similar characteristics but has had more time to stand on its merits.]

General support for SaferAI - growing too fast?

This $100,000 regrant was made by Adam Gleave to SaferAI, an organization that conducts research on AI risk management. Similar to Epoch AI and CAIS, I have confidence that SaferAI is doing good work, such as their ‘risk management maturity’ ratings for leading AI labs, featured in TIME Magazine.

Adam Gleave continues to support the grant, and is excited about further support to SaferAI:

They’ve done good work, and are one of really very very few policy-focused orgs in the EU that have gained any traction there. They’re the only one I can think of in France, which is such a key actor.

They can’t take [OpenPhil] money. This really limits the number of other sources.

However, I am concerned that SaferAI's leadership team is relatively inexperienced for their rate of growth; a concern shared in Adam’s comments and SaferAI’s proposal. They intend to grow and take on further projects in a way that might require a lot of coordination and administrative overhead—tasks the leadership may not be fully equipped to handle. I am not personally familiar with the SaferAI team, so I’m taking this as given based on what these guys have written, but it seems to me that this may limit the usefulness of additional funding to SaferAI at this point in time. In other words, SaferAI seems like an organization that may be bottlenecked along multiple axes (funding, experience, organizational capacity) such that fixing one bottleneck alone is less valuable than making grants towards another effort that is solely bottlenecked by funding. It may be that SaferAI was not a strong candidate for a Manifund regrant at the time (February 2025) but will be in the future as their ability to usefully absorb more funding increases.

On the other hand, one of the ways that this grant may be used is to allow Siméon, the Executive Director, to start drawing a salary, which seems a more straightforwardly good use of money, assuming he doesn’t have perpetual funding from another source. It’s the bringing on of additional (junior) staff that gives me more pause, although I must stress that my judgment here is low-confidence given my limited information on SaferAI’s capacity for expansion.

Beyond the organizational capacity issues, I also note that SaferAI has “multiple institutional and private funders from the wider AI safety space,” which, while certainly not disqualifying for a regrant, is another reason this regrant may be lower value than many others made through this program.

Finally, I commend SaferAI for the 'What are the most likely causes and outcomes if this project fails? (premortem)' section of their proposal, which was especially helpful for thinking about the value of this grant. I imagine it can be quite easy to write a premortem that doesn’t really get to the nub of a project’s potential downsides. I hope to see SaferAI continue to have positive impact and receive support from the AI safety community, but given their other limitations, I am not sure it was the best fit for the regranting program at this stage.

Austin again. I’m seeing some common patterns with these examples:

A large regrant ($100k+),

Made to established orgs with lots of money already,

By a single regrantor,

Who is extraordinarily busy

So these are heuristics for regrantors to keep in mind; and for Manifund to think about when designing our future program.

That said, I don’t think that pattern-matching to one of these heuristics is necessarily bad! Because:

Sometimes it’s actually right for a regrantor to spend all their money on a single bet. This year especially, Manifund is diversifying the program with more regrantors, across more fields, with smaller per-person budgets.

Sometimes orgs with good track records will have a lot of funding, but can still use the marginal $ better than a new org

Most regrants are made by a single regrantor; it’s rare to get contributions from multiple regrantors, or the general public.

In fact, I remember a bit of “regrantor game of chicken” happening with orgs like Timaeus and Apollo — everyone thought they were obviously good, but it wasn’t clear who should spend their budget on it. IMO, funders should think like angel investors — getting early “allocation” into good projects is good!

Regrantors who are busy are generally busy for good reason: they’re competent & well connected. As the saying goes, “If you want something done, give it to a busy person.”

Also, it’s good for Manifund to have famous (aka busy) people associated with the regranting program — it makes fundraising easier for us, and makes the program seem more legit. But we don’t want to overweight this; we definitely want regrantors who will actually make good grants too.

So overall, it’s really hard to say 🤷. Making good grants is a hard problem, one we’re always trying to get better at. Once again, we’re very grateful to every one of our regrantors for finding and funding the opportunities that they feel will most help the world!

I'm so honored by this write up! I'm so glad I've done you guys proud and really want to thank Joel, Evan, Austin and the rest of the Manifund team for setting up this platform and taking a chance on me and China + AI analysis.